Hello there,

I am working with a Sugar Cloud customer on version 13.0, and they are asking what the official way is to clean up old/orphaned records in all BPM SQL tables.

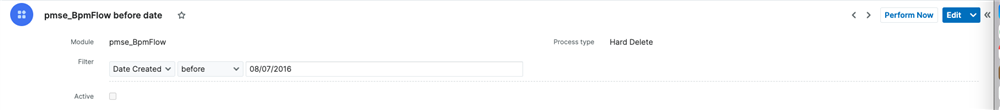

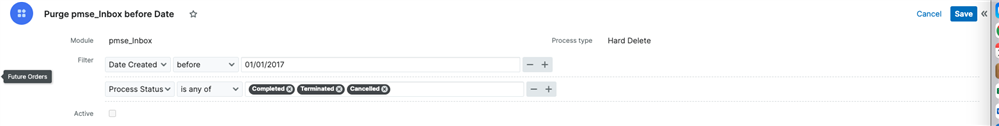

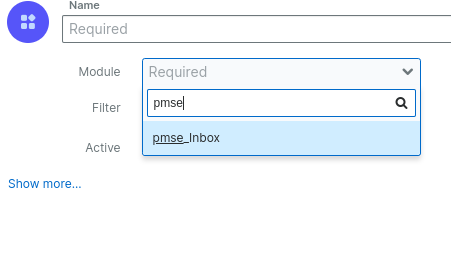

They already have a cleanup process for the pmse_inbox SQL table through the application tool.

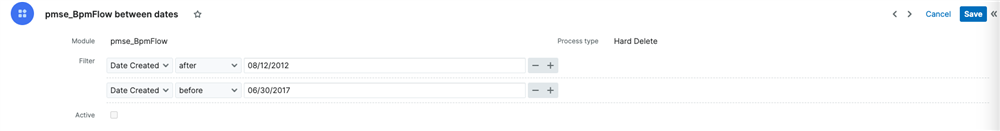

How can we efficiently and safely clean up SQL tables such as pmse_inbox, pmse_bpm_flow and pmse_bpm_form_action, both as a one-off and on an ongoing basis?

The pmse_bpm_form_action table alone holds about 3M records for this customer.

Are there existing best practices, knowledge base articles or tutorials on how to carry out this cleanup correctly? The goal is to improve performance and reduce cost.

We would also like to avoid the cleanup timing out the schedulers and failing midway, especially for the initial cleanups.
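
To make the timeout concern concrete, here is a rough sketch of the batching pattern we have in mind: delete in small committed chunks within a time budget, so that an interrupted run keeps its progress and the next run resumes. It runs against an in-memory SQLite database with a made-up table `demo_form_action` and a made-up `date_entered` column; the function name, schema and parameters are assumptions for illustration only, not the real pmse_* schema or a supported Sugar API.

```python
import sqlite3
import time


def delete_in_batches(conn, table, cutoff, batch_size=1000, time_budget_s=30.0):
    """Delete rows older than `cutoff` in small committed batches.

    Stops when no matching rows remain or the time budget is spent, so a
    later run can pick up where this one stopped. Returns rows deleted.
    `table` is interpolated into the SQL, so it must be a trusted name.
    """
    deadline = time.monotonic() + time_budget_s
    total = 0
    while time.monotonic() < deadline:
        cur = conn.execute(
            f"DELETE FROM {table} WHERE id IN ("
            f"SELECT id FROM {table} WHERE date_entered < ? LIMIT ?)",
            (cutoff, batch_size),
        )
        conn.commit()  # commit per batch so progress survives an interruption
        if cur.rowcount == 0:
            break  # nothing left to delete
        total += cur.rowcount
    return total


# Demo on a throwaway in-memory table (hypothetical schema, not pmse_*).
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE demo_form_action (id INTEGER PRIMARY KEY, date_entered TEXT)"
)
rows = [(i, "2020-01-01" if i < 2500 else "2024-01-01") for i in range(3000)]
conn.executemany("INSERT INTO demo_form_action VALUES (?, ?)", rows)
conn.commit()

deleted = delete_in_batches(conn, "demo_form_action", "2023-01-01")
remaining = conn.execute("SELECT COUNT(*) FROM demo_form_action").fetchone()[0]
```

Is there a supported equivalent of this pattern for the BPM tables, ideally one that also knows which records are safe to remove?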

Thank you for your help!